Why LLMs Often Give Generic Creative Advice

Large Language Models (LLMs) often give generic creative advice because they're trained on a massive dataset scraped from across the internet and learn to reproduce its most common patterns, which means leaning on tired clichés. To get truly original and relevant ideas, you have to move beyond simple prompts by feeding the AI deep context, specific constraints, and brand-aligned examples.

The Paradox of Limitless AI and Generic Creative Output

You asked a powerful generative AI for a groundbreaking ad campaign concept. You were hoping for something bold, disruptive, and perfectly in sync with your brand’s edgy voice. What you got back was a painfully predictable list:

- "Leverage user-generated content to build authenticity."

- "Create an emotional connection through storytelling."

- "Use humor to make your brand memorable."

Sound familiar? It’s a frustratingly common experience that highlights a strange paradox: how can a tool trained on such a vast slice of human writing produce such uninspired, generic advice? The problem isn't a failure of the technology itself but a fundamental misunderstanding of how it actually works.

The Anchoring Effect of AI Training Data

LLMs learn by spotting patterns across billions of data points scraped from every corner of the internet. This makes them incredibly good at recognizing and spitting back what is most common. When it comes to creative strategy, the most common advice is almost always the most generic. The AI defaults to these high-frequency, low-risk suggestions because its training data is statistically biased toward them.

You can think of this as a kind of anchoring effect. The model gets "anchored" to the safest, most repeated ideas it has learned, which makes it very hard to generate something truly novel without precise guidance. It’s why so many AI outputs feel like a "blurry JPEG of the web": a smoothed-out average of everything that already exists.

This isn't just a hunch; it has real-world business consequences. A 2023 Management Science experiment found that when expert writers used LLMs as ghostwriters, the ads they produced earned fewer clicks. The AI’s output dragged their creative work toward the bland, common patterns found in its training data, stripping away the unique voice that actually connects with people.

"The whole point of a blog post, to me, is that a human spent time thinking about something and arrived at conclusions worth sharing. The AI doesn’t inherently care about the topic the way humans do; it lacks genuine attachment to the idea."

Beyond the Algorithm: Safety and Neutrality Filters

There’s another piece to this puzzle: safety and neutrality filters. To prevent harmful or controversial outputs, developers train and tune LLMs to avoid edgy or extreme content. While this is absolutely essential for safety, these guardrails can inadvertently suffocate creativity. They push the model toward middle-of-the-road ideas that won't offend anyone, or inspire them either.

This is why the debate over whether AI will replace animators and other creatives often misses the point. The real value of human creativity lies in judgment, taste, and the willingness to break from the norm, which is exactly the kind of risk-taking these guardrails are designed to discourage.

Before we dive into the specific fixes, here's a quick rundown of the problems we're dealing with and the strategic solutions we'll cover.

Diagnosing and Fixing Generic LLM Creative Advice

These fixes are designed to give you tactical control over the AI's creative process. For a deeper look at the current state of AI and the challenges of generating unique content, check out the Top Takeaways From Similarweb's 2025 Report on the Generative AI Landscape.

Deconstructing Why AI Suggestions Are Often Vague

If you want to stop getting lukewarm, generic ideas from your LLM, you have to look under the hood. It’s not one single flaw causing the problem. It’s a mix of factors that naturally push the AI toward the bland and predictable.

Getting a handle on these issues is the first real step to designing better prompts and, ultimately, getting work that feels genuinely original. There are four main culprits working against you every time you hit "generate."

The Bias of Averaged Training Data

An LLM’s creativity is a direct reflection of its diet. These models are trained on absolutely colossal datasets scraped from the internet—a messy blend of brilliant articles, dry corporate blog posts, academic papers, and endless forum comments. Through this process, the model gets incredibly good at spotting and recreating the most common patterns and ideas it sees.

The result is a powerful bias toward the average. For every truly groundbreaking concept in its training data, there are thousands of mediocre, repetitive ones. The model learns that safe, common advice is the statistically "correct" answer. It’s not making a creative choice; it’s making a probabilistic one, and the odds will always favor the familiar over the novel.
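To make the "probabilistic choice" point concrete, here is a toy Python model. It is a deliberate simplification: it treats whole campaign ideas as the model's possible outputs and uses invented corpus counts, whereas real LLMs pick one token at a time from learned scores, not raw counts. Read it as an illustration of the statistics only. Greedy decoding always returns the most frequent idea, and raising the sampling temperature flattens the distribution so the rare idea gets a bigger (though still small) share.

```python
import math

# Invented counts for illustration only; not measured from any real corpus.
idea_counts = {
    "Use humor to be memorable": 9000,
    "Tell an emotional brand story": 8000,
    "Stage a fake product recall as satire": 12,
}

def idea_probabilities(counts, temperature=1.0):
    """Turn raw counts into probabilities; p_i is proportional to count_i ** (1 / temperature)."""
    logits = {idea: math.log(c) / temperature for idea, c in counts.items()}
    max_logit = max(logits.values())  # subtract the max for numerical stability
    exps = {idea: math.exp(l - max_logit) for idea, l in logits.items()}
    total = sum(exps.values())
    return {idea: e / total for idea, e in exps.items()}

def greedy_pick(counts):
    # Greedy decoding: always choose the single most likely option
    return max(counts, key=counts.get)

rare_idea = "Stage a fake product recall as satire"
for t in (0.5, 1.0, 2.0):
    share = idea_probabilities(idea_counts, temperature=t)[rare_idea]
    print(f"temperature={t}: chance of the rare idea = {share:.4f}")

print("greedy choice:", greedy_pick(idea_counts))
```

Even at a high temperature the unusual idea stays a long shot, which is why the rest of this article leans on prompting and context rather than on sampling settings alone.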

The Context Void You Must Fill

By default, an LLM knows nothing about you. It has no idea about your edgy brand voice, your niche target audience in the fintech space, or the competitive shark tank you're swimming in. Without that critical information, it's operating in a context void, forced to generate advice that could apply to anyone and everyone.

Think of it like asking a new creative hire for a campaign idea without telling them what company they work for. That's what a context-free prompt does. A fintech startup needs a social campaign that feels both trustworthy and modern, but a generic prompt like "social media ideas for a fintech brand" will just get you "post financial tips." It completely misses the brand identity that makes the startup unique. This is a classic challenge when first using GPT for marketing; you have to be the one to provide the context the AI lacks.

Ambiguous Prompts Lead to Ambiguous Answers

The old saying "garbage in, garbage out" is on steroids when it comes to creative AI. A vague or lazy prompt is a direct invitation for a generic response. Asking an LLM to "write a brand story" gives it almost infinite room to play it safe.

What does "story" even mean in this context? Who is it for? What's the tone? An AI can't read your mind. It defaults to a standard, three-act structure with a predictable hero's journey because that’s the safest, most common interpretation of your request. This isn't a failure of the AI's creativity—it's a direct result of the user's lack of specificity.

LLMs also have weak judgment about when to be concise and when to elaborate. Skilled human writers, by contrast, use repetition and detail deliberately, exactly where a point needs reinforcing. A vague prompt gives the AI no direction on where to focus its energy.
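One way to guard against ambiguous prompts is to refuse to send one until the basic questions above (who is it for, what's the tone, what format) have answers. The Python sketch below uses a hypothetical `CreativeBrief` structure; the field names are illustrative assumptions, not a standard, and no model is called.

```python
from dataclasses import dataclass, fields

@dataclass
class CreativeBrief:
    """Hypothetical brief structure; field names are illustrative only."""
    task: str
    audience: str = ""
    tone: str = ""
    output_format: str = ""

def missing_fields(brief: CreativeBrief) -> list[str]:
    # Every empty field is a question the model would otherwise answer with its safest default
    return [f.name for f in fields(brief) if not getattr(brief, f.name).strip()]

def build_prompt(brief: CreativeBrief) -> str:
    gaps = missing_fields(brief)
    if gaps:
        raise ValueError(f"Brief is ambiguous; fill in: {', '.join(gaps)}")
    return (
        f"Task: {brief.task}\n"
        f"Audience: {brief.audience}\n"
        f"Tone: {brief.tone}\n"
        f"Format: {brief.output_format}"
    )

vague = CreativeBrief(task="Write a brand story")
specific = CreativeBrief(
    task="Write a brand story",
    audience="Fintech-curious freelancers who distrust big banks",
    tone="Edgy, witty, and a little irreverent",
    output_format="A 150-word origin story for our About page",
)
print(missing_fields(vague))
print(build_prompt(specific))
```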

Safety Filters and the Cost of Neutrality

Finally, LLMs are built with intentional safety and neutrality filters. These guardrails are there to stop the model from spitting out harmful, offensive, or controversial content. While that's absolutely crucial for responsible AI, it has an unintended side effect: it can stifle creativity by discouraging risk.

Bold, edgy, or provocative ideas—the very things that define breakthrough creative campaigns—can sometimes brush up against what the AI flags as "unsafe." To avoid crossing those lines, the model often retreats to a neutral, sanitized middle ground. It's a deliberate trade-off. The system prioritizes safety over boundary-pushing originality, which means it’s your job to guide it toward creative risks that still fall within your brand's acceptable parameters.

This tendency to produce safe but uninspired content is backed by data. A recent study of 14 top LLMs found that while they can outperform the average human on creative tasks, only a tiny 0.28% of their responses ever reached the top 10% of human creativity benchmarks. You can read the full research about this creativity gap on arXiv.org.

Unlocking True Creativity with Advanced Prompting Techniques

To break the cycle of generic advice, you have to move past simple questions and start treating your prompts like a creative brief. This means using structured prompting patterns that force the model out of its comfortable, averaged-out defaults.

These aren't just parlor tricks; they are tactical frameworks for guiding the AI toward more specific, original, and brand-aligned ideas. You have to shift your mindset from asking the LLM a question to giving it a direct, detailed set of instructions. When you give it a role to play, rules to follow, and a process for thinking, you fundamentally change the quality of the output.

Let’s dig into three powerful techniques every marketing and creative team should have in their back pocket.

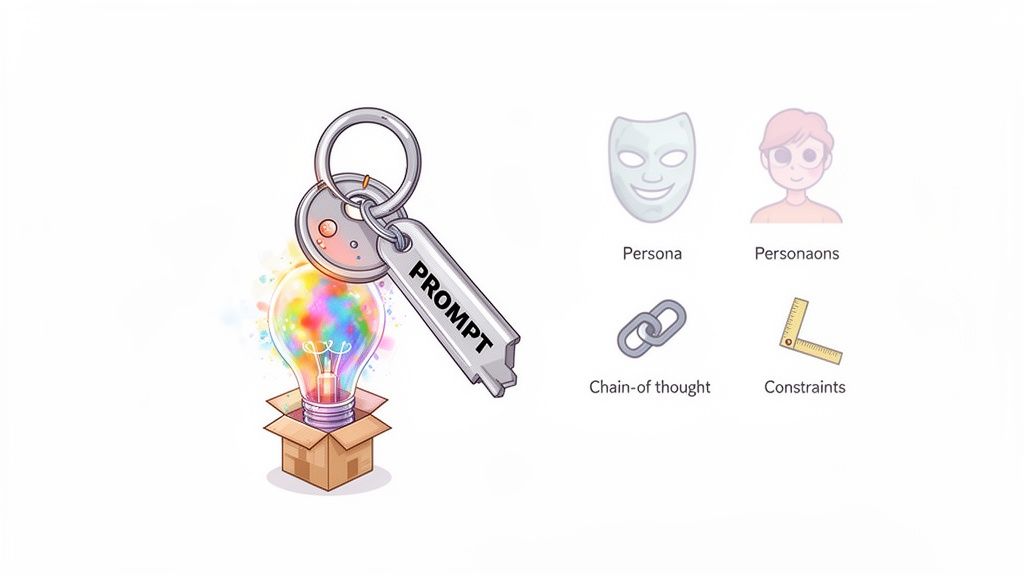

Assume a Persona for Deeper Insights

One of the fastest ways to kill generic output is to give the LLM a specific identity. The Persona Pattern is all about instructing the model to adopt the mindset, expertise, and even the personality of a particular expert. This contextualizes its massive knowledge base, forcing it to generate advice from a specific—and often opinionated—point of view.

- Before (Vague Prompt): "Give me ad campaign ideas for a new SaaS product."

- After (Persona Prompt): "You are a cynical, award-winning creative director who specializes in disruptive DTC brands. You've launched three viral campaigns in the last year. Give me three unconventional ad campaign ideas for a new B2B SaaS product that automates expense reporting. Focus on concepts that would get banned from LinkedIn for being too bold."

The "after" prompt works because it anchors the LLM in a well-defined character. That persona—cynical, award-winning, DTC-focused—provides a rich set of implicit constraints that steer the AI's creative direction, leading to far more interesting concepts than a generic request ever could.
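If you work with a model through an API rather than a chat window, the persona usually belongs in the system message so it shapes every reply in the conversation. The Python sketch below only builds the message list; the `role`/`content` dictionary format is the one used by OpenAI's Chat Completions API, while Anthropic's Messages API takes the system prompt as a separate `system` parameter instead. The helper `persona_messages` is hypothetical.

```python
def persona_messages(persona: str, request: str) -> list[dict]:
    """Build a chat-style message list with the persona in the system role."""
    return [
        {"role": "system", "content": persona},
        {"role": "user", "content": request},
    ]

persona = (
    "You are a cynical, award-winning creative director who specializes in "
    "disruptive DTC brands. You've launched three viral campaigns in the last year."
)
request = (
    "Give me three unconventional ad campaign ideas for a new B2B SaaS product "
    "that automates expense reporting. Focus on concepts that would get banned "
    "from LinkedIn for being too bold."
)
messages = persona_messages(persona, request)
```

Keeping the persona separate from the request also makes it easy to rerun the same request under different personas and compare the results.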

Impose Strict Creative Constraints

Creativity loves constraints. When you give an LLM limitless freedom, it defaults to the safest, most predictable options. Constraint-Based Prompting is the art of setting firm boundaries and rules for the AI, which forces it to find novel solutions within a defined space. Think of it as giving the AI a puzzle to solve rather than an open-ended question.

This technique is incredibly useful for day-to-day marketing tasks. You can set limits on word count, tone, style, or even specify words to avoid.

- Before (Vague Prompt): "Write some slogans for our new eco-friendly water bottle."

- After (Constrained Prompt): "Generate five ad slogans for our new water bottle made from recycled ocean plastic. Each slogan must be under six words, evoke a feeling of optimistic activism, and must not use the words 'sustainable,' 'green,' or 'eco-friendly'."

By taking the most obvious word choices off the table, you compel the LLM to dig deeper for more inventive language. These are the exact kinds of constraints you'd find in a professional brief. To get better at structuring these inputs, check out our guide on how to write a creative brief that actually gets results.
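Constraints work best when you state them in the prompt and also check them afterwards, because models sometimes ignore a "don't use" rule. Here is a minimal Python sketch built on the slogan brief above; the helper names are hypothetical, and the word count is a simple whitespace split.

```python
import re

# Constraints taken from the example brief above
BANNED_WORDS = ("sustainable", "green", "eco-friendly")
MAX_WORDS = 5  # "under six words"

def constrained_prompt(product: str, count: int = 5) -> str:
    """Spell every constraint out explicitly in the prompt."""
    banned = ", ".join(f"'{w}'" for w in BANNED_WORDS)
    return (
        f"Generate {count} ad slogans for {product}. "
        f"Each slogan must be under {MAX_WORDS + 1} words, evoke a feeling of "
        f"optimistic activism, and must not use the words {banned}."
    )

def slogan_violations(slogan: str) -> list[str]:
    """Check one model-generated slogan against the same constraints."""
    problems = []
    word_count = len(slogan.split())
    if word_count > MAX_WORDS:
        problems.append(f"too long ({word_count} words)")
    lowered = slogan.lower()
    for term in BANNED_WORDS:
        # Word boundaries, so "greenhouse" does not trip the "green" rule
        if re.search(rf"\b{re.escape(term)}\b", lowered):
            problems.append(f"uses banned word '{term}'")
    return problems

print(constrained_prompt("our new water bottle made from recycled ocean plastic"))
print(slogan_violations("Stay green, stay sustainable, stay hydrated today"))
```

Slogans that fail the check can simply be dropped or sent back with the violation list attached as feedback.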

"Figuring out what to say, how to frame it, and when and how to go deep is still the hard part. That’s what takes judgment, and that’s what LLMs can’t do for me (yet)."

This insight really gets to the heart of why constraints are so vital. You're providing the judgment the LLM lacks, directing its powerful generative engine toward a specific creative target.

Force Deeper Thinking with Chain of Thought

Sometimes, you don't just want an answer; you want to see the thinking behind it. Chain-of-Thought (CoT) prompting is an advanced technique where you ask the AI to "think out loud" by explaining its reasoning step-by-step before giving you the final answer. This forces the model to follow a more logical and structured creative process, which often uncovers more nuanced and well-reasoned ideas.

This is especially helpful for complex strategic tasks, like coming up with a video concept for a fundraiser.

Example of a CoT Prompt:

"We need a concept for a 90-second fundraising video for a nonprofit that provides clean water to rural communities. Before you give me the final concept, please walk me through your thought process step-by-step:

- Audience Analysis: Who is the target donor, and what motivates them?

- Emotional Core: What is the single most powerful emotion we want to evoke?

- Narrative Arc: Outline a simple story structure (beginning, middle, end).

- Visual Style: Describe the ideal visual and audio style.

- Final Concept: Based on the above, provide a one-paragraph video concept."

This method stops the LLM from jumping to a superficial conclusion. By breaking the problem down, you guide it through a creative development process that mirrors how a human strategist works, leading to a much richer and more thoughtful final output. It’s a game-changer for getting past generic advice because it replaces shallow answers with structured, reasoned solutions.
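If your team reuses this structure often, you can keep the steps as data and assemble the prompt in code, which makes it easy to swap steps for a different kind of brief. A minimal Python sketch; `chain_of_thought_prompt` is a hypothetical helper, and the steps are copied from the example above.

```python
# Steps copied from the example prompt above; edit freely for other briefs
COT_STEPS = [
    ("Audience Analysis", "Who is the target donor, and what motivates them?"),
    ("Emotional Core", "What is the single most powerful emotion we want to evoke?"),
    ("Narrative Arc", "Outline a simple story structure (beginning, middle, end)."),
    ("Visual Style", "Describe the ideal visual and audio style."),
    ("Final Concept", "Based on the above, provide a one-paragraph video concept."),
]

def chain_of_thought_prompt(task: str, steps: list[tuple[str, str]]) -> str:
    """Ask the model to reason through each named step before the final answer."""
    lines = [
        task,
        "Before you give me the final concept, please walk me through your "
        "thought process step-by-step:",
    ]
    for number, (name, question) in enumerate(steps, start=1):
        lines.append(f"{number}. {name}: {question}")
    return "\n".join(lines)

prompt = chain_of_thought_prompt(
    "We need a concept for a 90-second fundraising video for a nonprofit that "
    "provides clean water to rural communities.",
    COT_STEPS,
)
print(prompt)
```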

Building a Context Engine for Brand-Aligned AI

An LLM without context is like a creative director who just walked in off the street—all the raw talent is there, but they have no idea what your brand is about. Advanced prompting is a solid start, but it’s most powerful when the AI has a deep well of brand-specific knowledge to pull from. This is where context engineering comes into play.

Instead of hitting the reset button with every new prompt, you build a persistent "brain" for the AI that truly gets your brand's DNA. This simple shift transforms the LLM from a generic idea machine into a personalized creative partner that speaks your language and understands your corner of the market.

Creating Your LLM's Brand Bible

The heart of your context engine is a comprehensive "brand bible." This isn't just a list of fonts and hex codes; it's a living document that holds everything that makes your brand unique. This bible becomes the single source of truth the AI references to churn out relevant, on-brand creative ideas.

To make this information easily accessible for the AI, it's incredibly helpful to structure it as an AI-powered knowledge base. Think of it as organizing the library so the model can find the right book instantly for any creative task.

Your brand bible should include a few non-negotiables:

- Brand Voice and Tone Guidelines: Get specific about your brand's personality. Are you witty and irreverent, or authoritative and trustworthy? Include a "words we love" and "words we never use" list.

- Audience Personas: Paint a detailed picture of your ideal customers. What are their pain points, what drives them, and how do they like to be spoken to?

- Competitor Analysis: Briefly break down your top three competitors. What's their market position, and what makes you different?

- Unique Value Propositions (UVPs): Clearly spell out what makes your product or service the best choice for your audience.

Putting this information together gives the AI the strategic guardrails it needs to stop serving up generic advice. For a deeper dive, check out our guide on how to create brand guidelines that will form the perfect foundation.
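If you manage prompts in code, the brand bible can live as structured data that is rendered into the same block of text in front of every creative request. The Python sketch below is one possible shape, assuming a hypothetical `BrandBible` class whose sections mirror the list above; the example values are placeholders, not real brand data.

```python
from dataclasses import dataclass, field

@dataclass
class BrandBible:
    """Hypothetical container for the brand-bible sections listed above."""
    voice: str
    words_we_love: list[str] = field(default_factory=list)
    words_we_never_use: list[str] = field(default_factory=list)
    personas: list[str] = field(default_factory=list)
    competitors: dict[str, str] = field(default_factory=dict)
    uvps: list[str] = field(default_factory=list)

    def to_system_prompt(self) -> str:
        """Render the bible as plain text to prepend to every creative request."""
        lines = [f"Brand voice and tone: {self.voice}"]
        if self.words_we_love:
            lines.append("Words we love: " + ", ".join(self.words_we_love))
        if self.words_we_never_use:
            lines.append("Words we never use: " + ", ".join(self.words_we_never_use))
        for persona in self.personas:
            lines.append(f"Audience persona: {persona}")
        for name, position in self.competitors.items():
            lines.append(f"Competitor {name}: {position}")
        for uvp in self.uvps:
            lines.append(f"Unique value proposition: {uvp}")
        return "\n".join(lines)

# Placeholder values for illustration only
bible = BrandBible(
    voice="Confident, empowering, and direct",
    words_we_love=["control", "freedom"],
    words_we_never_use=["synergy"],
    personas=["First-time budgeters in their twenties who feel anxious about money"],
    competitors={"Competitor A": "Feature-heavy and intimidating"},
    uvps=["A working budget in minutes, not weekends"],
)
system_prompt = bible.to_system_prompt()
print(system_prompt)
```

Because every request is built from the same object, an update to the bible reaches every future prompt at once.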

The Power of Few-Shot Prompting

Once your brand bible is ready, you can start using a technique called few-shot prompting to guide the AI's style in real-time. It’s pretty simple: you just provide a few high-quality examples of your desired output directly within the prompt itself. The model then uses those examples as a template for style, tone, and format.

Imagine you want to create social media clips based on your best-performing blog posts. Instead of just asking for ideas, you’d feed the LLM a snippet of your most successful article and tell it to generate concepts in that exact voice.

Example Scenario: Generating On-Brand Social Copy

Let's say a fintech company wants to create an Instagram post about its new budgeting feature.

- Zero-Shot (No Context): "Write an Instagram post about our new budgeting feature." The AI will almost certainly spit out something bland and forgettable.

- Few-Shot (With Context): "Here is an example of our brand voice from a recent blog post: 'Stop letting your money manage you. Our new feature puts you back in the driver's seat, so you can spend less time stressing and more time living.' Now, write three Instagram captions for our new budgeting feature in this same confident, empowering, and direct tone."

The second approach gives the AI a concrete example to mimic, which dramatically increases your odds of getting brand-aligned output on the first try.

Providing specific examples is the single most effective way to steer an LLM's creative output. It moves the interaction from a vague request to a clear demonstration of 'more like this, less like that.'
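The few-shot example above can be assembled programmatically from a library of approved copy. A minimal Python sketch with a hypothetical `few_shot_prompt` helper; note that with a single example this is strictly "one-shot", and adding two or three varied examples tends to give the model a clearer sense of the pattern you want.

```python
def few_shot_prompt(examples: list[str], instruction: str) -> str:
    """Place approved on-brand examples ahead of the actual request."""
    if not examples:
        raise ValueError("Few-shot prompting needs at least one example")
    shots = "\n\n".join(f"Example {i}:\n{text}" for i, text in enumerate(examples, start=1))
    return f"Here are examples of our brand voice.\n\n{shots}\n\n{instruction}"

examples = [
    "Stop letting your money manage you. Our new feature puts you back in the "
    "driver's seat, so you can spend less time stressing and more time living.",
]
prompt = few_shot_prompt(
    examples,
    "Now, write three Instagram captions for our new budgeting feature in this "
    "same confident, empowering, and direct tone.",
)
print(prompt)
```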

This isn't just a hunch. A 2023 EMNLP study found that LLMs like GPT-4 score terribly on originality (2.1 out of 10) and stylistic flair (1.1 out of 10) when prompted without any context. The research confirmed that context-free prompting is a direct cause of the generic, one-size-fits-all narratives that are useless for specialized formats like promo videos or training content. You can get into the weeds of these findings on context and creativity at aclanthology.org.

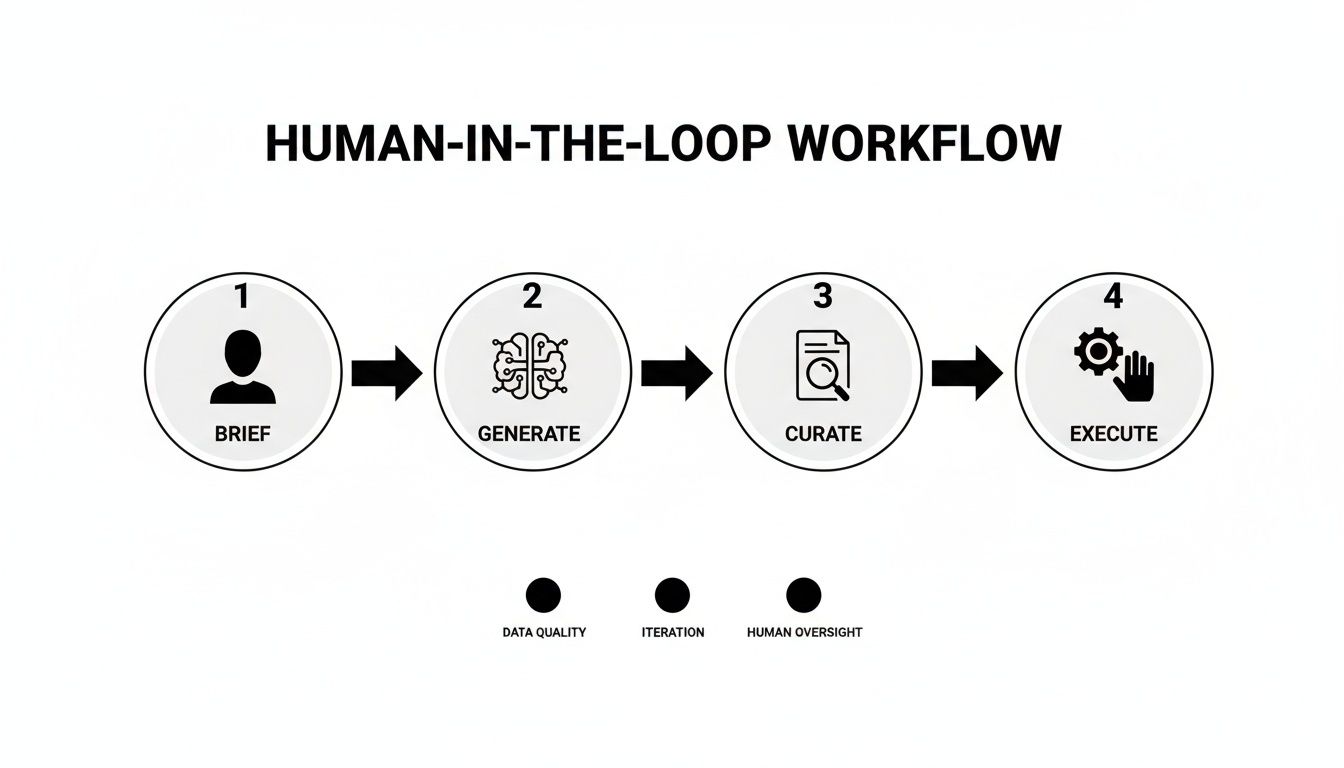

Implementing a Human-in-the-Loop Workflow

Even with the slickest prompts and richest context, technology alone isn't the answer. The real secret to fixing generic creative advice from an LLM is integrating genuine human expertise. A truly effective workflow doesn't try to replace human creativity; it supercharges it, turning the LLM from a flawed ghostwriter into a powerful creative collaborator.

We call this the "Sounding Board" approach. Instead of asking the AI to spit out a finished campaign, you use it as an infinite, tireless brainstorming partner. The goal is to generate a massive volume of raw, unfiltered ideas that a human creative director can then curate, refine, and elevate into something brilliant.

The Four Stages of Hybrid Creativity

This collaborative process isn't random; it's a clear, repeatable cycle. It’s a system designed to blend computational scale with human judgment, making sure the AI handles the grunt work of raw ideation while human strategists keep full control over quality, brand alignment, and the final creative vision.

The whole thing unfolds in four distinct phases:

- The Human Strategist Defines the Brief: It always starts with a person. A creative director or marketing lead crafts a detailed brief packed with the advanced prompts and context we've already covered. This isn't just asking a simple question; it's a strategic directive that sets the stage for what the AI needs to do.

- The AI Generates Raw Ideas: With that strategic brief as its guide, the LLM goes to work, generating a wide spectrum of ideas. This is the divergence phase, where quantity trumps quality. An AI can churn out dozens of concepts (from the painfully safe to the wonderfully strange) in minutes, where a human team would need hours, if not days.

- The Human Creative Director Selects and Synthesizes: This is where the magic happens, and it's frankly the most critical step. A human expert with taste and experience sifts through the AI's raw output. They toss out the generic duds, spot the promising sparks of originality, and often combine multiple half-baked ideas into a single, cohesive concept. This is where experience and brand knowledge create real value.

- The Hybrid Team Executes the Vision: Once the human director has chosen and polished a core concept, the hybrid team takes over. The AI can be brought back in for tactical execution (writing copy variations, brainstorming shot lists, or drafting initial scripts) while human designers, animators, and editors bring the final vision to life.

This systematic approach gives you the best of both worlds. You get the raw speed and scale of AI ideation without sacrificing the nuance and strategic insight that only a human expert can provide.
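The four stages map onto a small pipeline in which the human steps are explicit inputs rather than something the code does for you. In this Python sketch, `hybrid_cycle` and the three stand-in functions are hypothetical: `fake_generate` takes the place of a real LLM call, and `human_curate` stands in for a creative director's judgment, which no code can actually supply.

```python
from typing import Callable

def hybrid_cycle(brief: str,
                 generate: Callable[[str, int], list[str]],
                 curate: Callable[[list[str]], list[str]],
                 execute: Callable[[str], str],
                 n_ideas: int = 20) -> list[str]:
    # Stage 1 (human): the brief is written by a person and passed in
    raw_ideas = generate(brief, n_ideas)      # Stage 2 (AI): divergent ideation
    concepts = curate(raw_ideas)              # Stage 3 (human): select and synthesize
    return [execute(c) for c in concepts]     # Stage 4 (hybrid): execution

def fake_generate(brief: str, n: int) -> list[str]:
    # Stand-in for an LLM call; alternates "safe" and "strange" ideas
    styles = ["safe", "strange"]
    return [f"{styles[i % 2]} idea #{i} for: {brief}" for i in range(n)]

def human_curate(ideas: list[str]) -> list[str]:
    # Stand-in for a creative director: drop the safe ideas, merge the first two strange ones
    keepers = [idea for idea in ideas if idea.startswith("strange")]
    return [" + ".join(keepers[:2])] if keepers else []

def fake_execute(concept: str) -> str:
    # Stand-in for AI-assisted drafting that humans then polish
    return f"Draft script based on: {concept}"

results = hybrid_cycle("Launch video for a budgeting app",
                       fake_generate, human_curate, fake_execute, n_ideas=6)
print(results)
```

Passing the curation step in as a function keeps the human decision point visible in the workflow instead of burying it inside automation.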

AI-Only Workflow vs Human-in-the-Loop Workflow

The difference between a fully automated workflow and one with a human at the helm is night and day. The first approach chases speed at the expense of quality, while the second uses AI to enhance, not replace, the creative process. For any brand that wants to stand out instead of just adding to the noise, this distinction is everything.

"AI isn't replacing my thinking. I bring the insights, the strategy, the real life examples. Then, AI handles the research, writing mechanics, and formatting."

This nails the essence of the Human-in-the-Loop model. The human provides the "why," and the AI helps with the "how."

The table below breaks down just how different these two approaches are in practice. One treats the AI like a ghostwriter, hoping for a finished product. The other treats it like a sounding board, a tool for a skilled professional.

Ultimately, the definitive fix for generic AI creative is putting a human back in charge. This approach reframes the LLM as a powerful tool in the creative arsenal, not the artist itself. It’s how you ensure your creative output is not only fast and scalable but also smart, original, and deeply connected to your brand.

Common Questions About Getting Better Creative From AI

Jumping into generative AI can feel a bit like trying to speak a new language, especially when you're counting on it for creative work. As marketing and creative teams start weaving these tools into their daily flow, some common questions and hang-ups are bound to surface. I've gathered the big ones here to help you get unstuck and start producing less generic, more impactful ideas from your AI.

Which LLM Is the "Best" for Creative Work?

Honestly, there's no single "best" model—the field is just moving too fast. That said, we're consistently impressed by models like Anthropic's Claude 3 family (Opus, in particular) and OpenAI's GPT-4 series for their ability to handle nuanced, creative assignments.

But the real key is to test them against your specific needs. Some models are brilliant at whipping up short, witty ad copy, while others are powerhouses for developing complex narrative concepts for a campaign.

Instead of locking into one platform, try to maintain a small portfolio of models you can pit against your creative briefs. You'll quickly find one becomes your go-to for social media hooks, while another is perfect for drafting long-form video scripts.

Whichever models you choose, they should feed into a Human-in-the-Loop workflow, which is essential for blending strategic human oversight with the raw generative power of AI.

The idea is simple but powerful: a human strategist sets the goals, the AI generates a wide range of options, an expert curates the strongest ideas, and then a hybrid team brings the final vision to life.

How Do I Get the AI to Stop Being So Repetitive?

Seeing the same phrases or ideas pop up over and over? That's a classic sign of an under-prompted or "lazy" AI. When the model doesn't have enough specific direction or context, it just defaults to the safest, most common patterns it learned during training. The result is boring, redundant output.

The fix, nearly every time, is to get tighter with your constraints and add variety to your instructions. Give these tactics a try:

- Use Negative Constraints: Be explicit about what you don't want. For example: "Generate three headlines for a new coffee brand. Do not use the word 'discover' or the phrase 'unleash the flavor.'"

- Demand Different Formats: Ask for the same idea in multiple formats at once. Try something like, "Give me a tagline, a tweet, and a 3-bullet summary for this product concept." This forces the model to think more flexibly.

- Lean on the Persona Pattern: Tell the AI to adopt a specific persona for each batch of ideas, like "Generate ideas as a cynical Gen Z marketing intern" and then "Now, generate ideas as a seasoned, award-winning copywriter."
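Before you can write negative constraints, you have to notice the repeats. A small script can flag phrases that keep showing up across a batch of outputs and turn them into a "do not use" line for the next prompt. This Python sketch assumes simple word-pair matching (bigrams), which is a crude stand-in for human judgment about what counts as a cliché; the helper names are hypothetical.

```python
import re
from collections import Counter

def repeated_phrases(outputs: list[str], n: int = 2, min_outputs: int = 2) -> dict[str, int]:
    """Return n-word phrases that show up in at least `min_outputs` separate outputs."""
    seen_in = Counter()
    for text in outputs:
        words = re.findall(r"[a-z']+", text.lower())
        # A set, so a phrase repeated inside one output counts only once for that output
        ngrams = {" ".join(words[i:i + n]) for i in range(len(words) - n + 1)}
        seen_in.update(ngrams)
    return {phrase: count for phrase, count in seen_in.items() if count >= min_outputs}

def negative_constraint(phrases) -> str:
    """Turn detected repeats into an explicit instruction for the next batch."""
    quoted = ", ".join(f"'{p}'" for p in sorted(phrases))
    return f"Do not use the phrases {quoted}."

batch = [
    "Discover the bold taste",
    "Discover the morning ritual",
    "Unleash the flavor today",
]
repeats = repeated_phrases(batch)
print(negative_constraint(repeats))
```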

How Much Context Is Too Much?

It's tempting to think that dumping more context into a prompt will always lead to better results, but you can definitely hit a point of diminishing returns. Flooding a prompt with irrelevant details can actually confuse the model and water down your core instructions. The goal isn't to give the AI your company's entire life story; it's to give it the right information for the task at hand.

A good rule of thumb: provide just enough context to make the task completely unambiguous. For ad copy, that might just be your brand voice guide, target audience persona, and the key value prop. For a full campaign concept, you’ll want to add competitor notes and strategic goals.

Think of it like briefing a freelance creative—give them everything they need to succeed on this project, not a link to your company's entire history on Wikipedia.
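The rule of thumb above can be written down as tiers: each task type pulls only the brand-bible sections it needs. In this Python sketch the tier names, section keys, and example values are all assumptions that mirror the ad-copy and campaign-concept examples; they are not a fixed standard.

```python
# Hypothetical tiers: which brand-bible sections each kind of task needs
CONTEXT_BY_TASK = {
    "ad_copy": ["voice", "audience", "value_prop"],
    "campaign_concept": ["voice", "audience", "value_prop", "competitors", "goals"],
}

def select_context(brand_bible: dict[str, str], task_type: str) -> str:
    """Return only the sections this task needs, in a fixed order."""
    sections = CONTEXT_BY_TASK[task_type]
    missing = [s for s in sections if s not in brand_bible]
    if missing:
        raise KeyError(f"brand bible is missing: {', '.join(missing)}")
    return "\n\n".join(f"[{s}]\n{brand_bible[s]}" for s in sections)

# Placeholder values for illustration only
bible = {
    "voice": "Confident, empowering, and direct",
    "audience": "First-time budgeters in their twenties",
    "value_prop": "Budgeting that takes minutes, not weekends",
    "competitors": "Incumbents feel complex and intimidating",
    "goals": "Drive sign-ups for the new budgeting feature",
    "company_history": "A long founding story that neither task needs",
}
print(select_context(bible, "ad_copy"))
```

Starting at the smallest tier and moving up only when the output misses the mark keeps prompts focused and makes it easier to see which extra context actually helped.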

My advice is to start with minimal context and only layer in more detail if the output is still missing the mark. You can find more practical tips like this in our other resources on AI for creative teams.